GRASS: Generative Recursive Autoencoders for Shape Structures

Jun Li¹, Kai Xu¹,²,³, Siddhartha Chaudhuri⁴, Ersin Yumer⁵, Hao Zhang⁶, Leonidas Guibas⁷

¹National University of Defense Technology, ²Shenzhen University, ³Shandong University, ⁴IIT Bombay, ⁵Adobe Research, ⁶Simon Fraser University, ⁷Stanford University

(Kai Xu is the corresponding author.)

ACM Transactions on Graphics (SIGGRAPH 2017), 36(4)

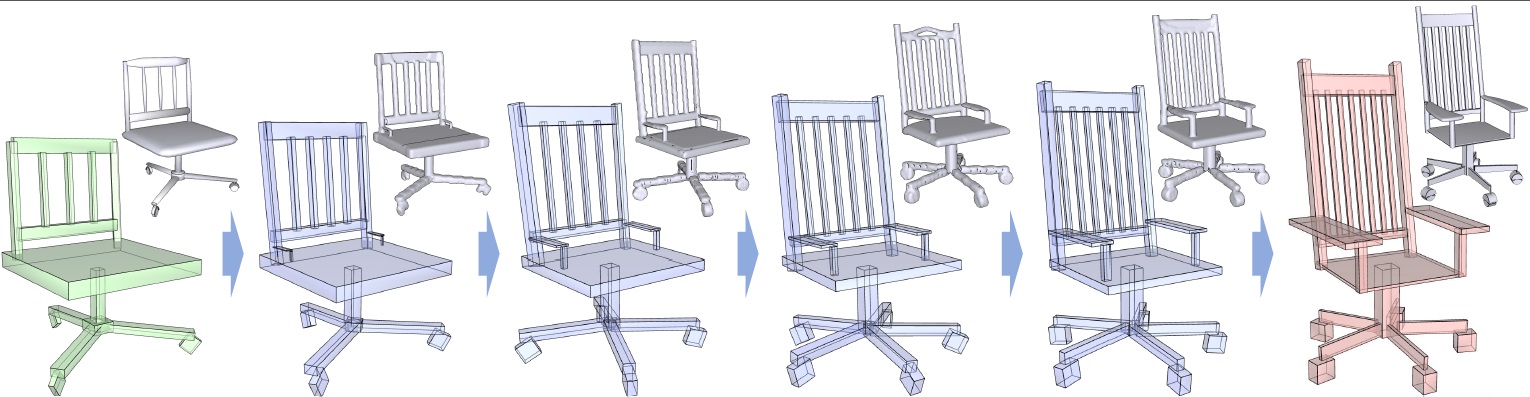

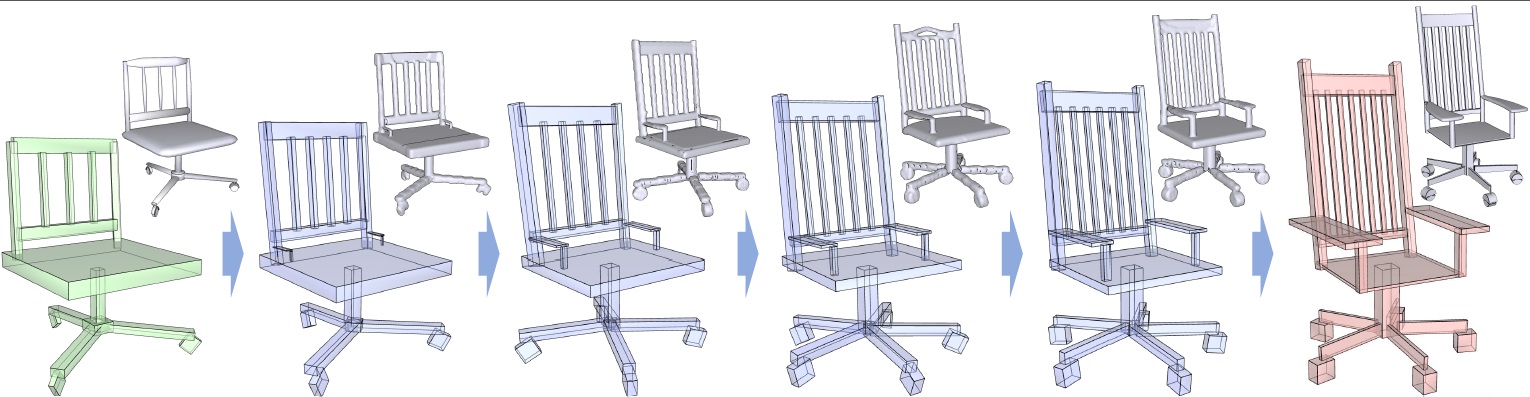

We develop GRASS, a Generative Recursive Autoencoder of Shape Structures, which enables structural blending between two 3D shapes. Note the discrete blending of translational symmetries (slats on the chair backs) and rotational symmetries (the swivel legs). GRASS encodes and synthesizes box structures (bottom) and part geometries (top) separately. The blending is performed on fixed-length codes learned by the unsupervised autoencoder, without any form of part correspondences, given or computed.

Abstract

We introduce a novel neural network architecture for encoding and synthesis of 3D shapes, particularly their structures. Our key insight is that 3D shapes are effectively characterized by their hierarchical organization of parts, which reflects fundamental intra-shape relationships such as adjacency and symmetry. We develop a recursive neural net (RvNN) based autoencoder to map a flat, unlabeled, arbitrary part layout to a compact code. The code effectively captures hierarchical structures of man-made 3D objects of varying structural complexities despite being fixed-dimensional: an associated decoder maps a code back to a full hierarchy. The learned bidirectional mapping is further tuned using an adversarial setup to yield a generative model of plausible structures, from which novel structures can be sampled. Finally, our structure synthesis framework is augmented by a second trained module that produces fine-grained part geometry, conditioned on global and local structural context, leading to a full generative pipeline for 3D shapes. We demonstrate that without supervision, our network learns meaningful structural hierarchies adhering to perceptual grouping principles, produces compact codes which enable applications such as shape classification and partial matching, and supports shape synthesis and interpolation with significant variations in topology and geometry.
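To illustrate how a recursive encoder can map hierarchies of different sizes to a code of one fixed length, here is a minimal sketch. It is not the released implementation: the weights are random and untrained, the hierarchy is given rather than inferred, symmetry nodes are omitted, and `CODE_DIM`, `BOX_DIM`, `make_layer`, `apply_layer`, `encode` and `random_box` are all hypothetical names and sizes chosen for this example.

```python
import math
import random

CODE_DIM = 8   # illustrative code length, not the paper's setting
BOX_DIM = 12   # assumed length of one box parameter vector


def make_layer(n_in, n_out, rng):
    """Build one fully connected layer with random, untrained weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


def apply_layer(layer, x):
    """Compute tanh(W x + b) for a single input vector."""
    weights, bias = layer
    return [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


rng = random.Random(0)
box_encoder = make_layer(BOX_DIM, CODE_DIM, rng)         # leaf box -> code
merge_encoder = make_layer(2 * CODE_DIM, CODE_DIM, rng)  # two child codes -> parent code


def encode(node):
    """Encode a hierarchy bottom-up.

    A leaf is a list of BOX_DIM floats; an internal node is a (left, right) tuple.
    """
    if isinstance(node, tuple):
        left, right = node
        return apply_layer(merge_encoder, encode(left) + encode(right))
    return apply_layer(box_encoder, node)


def random_box():
    """Return a placeholder box vector (random values, for illustration only)."""
    return [rng.uniform(-1.0, 1.0) for _ in range(BOX_DIM)]


# Two hierarchies with different numbers of parts yield codes of the same length.
two_parts = (random_box(), random_box())
five_parts = ((random_box(), random_box()),
              (random_box(), (random_box(), random_box())))
code_small = encode(two_parts)
code_large = encode(five_parts)
```

Because the same merge layer is reused at every internal node, the code length stays at `CODE_DIM` however many parts the hierarchy contains.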


Images

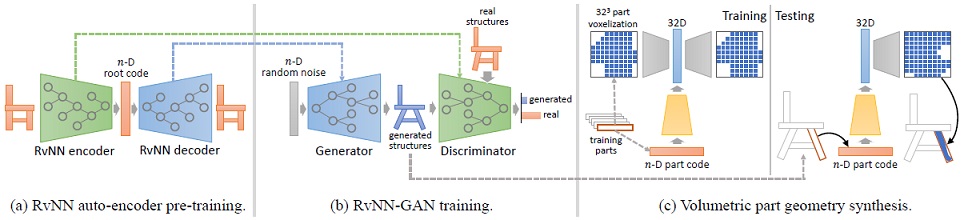

An overview of our pipeline, comprising three key stages: (a) pre-training the RvNN autoencoder to obtain root codes for shapes, (b) using a GAN to learn the actual shape manifold within the code space, and (c) using a second network to convert synthesized OBBs to detailed geometry.

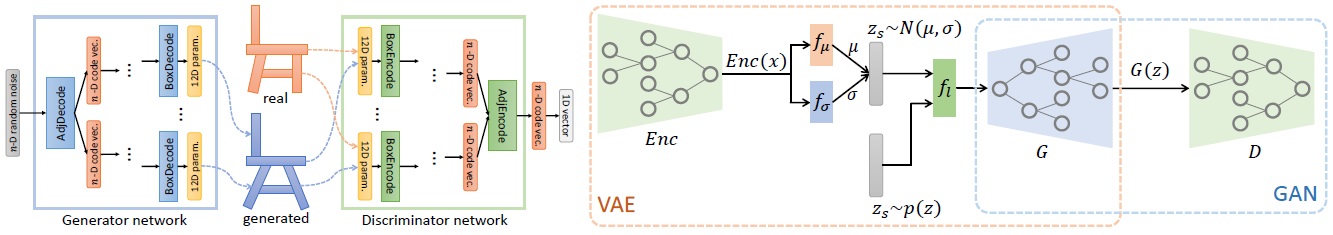

Left: Architecture of our generative adversarial network, showing reuse of autoencoder modules. Right: Confining random codes by sampling from learned Gaussian distributions based on learned root codes. Jointly learning the distribution and training the GAN leads to a VAE-GAN network.
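Sampling a code from a learned Gaussian can be sketched as follows. This assumes the usual VAE convention of a diagonal Gaussian described by a mean and a log-variance per dimension, which the caption does not spell out; `sample_code` and the example values are hypothetical.

```python
import math
import random


def sample_code(mean, log_var, rng):
    """Draw one code from a diagonal Gaussian: z = mean + sigma * eps.

    The diagonal parameterization is an assumption made for this sketch.
    """
    if len(mean) != len(log_var):
        raise ValueError("mean and log_var must have the same length")
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]


rng = random.Random(0)
mean = [0.5, -1.0, 2.0]      # placeholder values, not learned parameters
log_var = [0.0, 0.0, 0.0]    # unit variance in every dimension
z = sample_code(mean, log_var, rng)
```

Drawing codes this way keeps samples close to the region occupied by root codes of real shapes, rather than spreading them over the whole code space.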

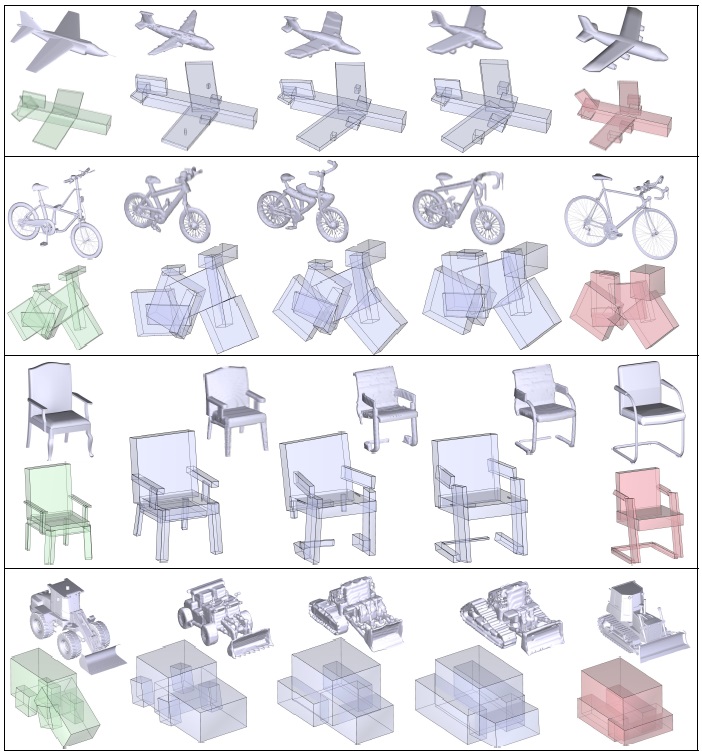

Linear interpolation between root codes, and subsequent synthesis, can result in plausible morphs between shapes with significantly different topologies.
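The interpolation step itself is simple arithmetic on the fixed-length codes. A minimal sketch, where `interpolate_codes` is a hypothetical helper and the two-dimensional codes are placeholders; decoding each intermediate code back to a shape is left out:

```python
def interpolate_codes(code_a, code_b, steps):
    """Return `steps` codes evenly spaced on the line from code_a to code_b."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    if len(code_a) != len(code_b):
        raise ValueError("codes must have the same length")
    result = []
    for i in range(steps):
        t = i / (steps - 1)  # t runs from 0.0 to 1.0 inclusive
        result.append([(1 - t) * a + t * b for a, b in zip(code_a, code_b)])
    return result


# Placeholder codes; real root codes would come from the trained encoder.
morph = interpolate_codes([0.0, 2.0], [4.0, 0.0], steps=3)
# morph[1] is the midpoint [2.0, 1.0]
```

Each code in `morph` would then be passed to the decoder to synthesize the corresponding intermediate structure.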

Thanks

We thank the anonymous reviewers for their valuable comments and suggestions. We are grateful to Yifei Shi, Min Liu and Yizhi Wang for their generous help with data preparation and result production. Jun Li is a visiting PhD student at the University of Bonn, supported by the China Scholarship Council.

This work was supported in part by NSFC (61572507, 61532003, 61622212), an NSERC grant (611370), NSF Grants IIS-1528025 and DMS-1546206, a Google Focused Research Award, and gifts from the Adobe, Qualcomm and Vicarious corporations.

Code and Data

We release the source code for training and testing, a trained model, and our dataset:

Matlab code (with a sample dataset)

PyTorch code (currently with only the VAE part; thanks to Chenyang Zhu's original implementation)

Full dataset (with both raw shape geometry and box structures)

Bibtex

@article{li_sig17,
  title = {GRASS: Generative Recursive Autoencoders for Shape Structures},
  author = {Jun Li and Kai Xu and Siddhartha Chaudhuri and Ersin Yumer and Hao Zhang and Leonidas Guibas},
  journal = {ACM Transactions on Graphics (Proc. of SIGGRAPH 2017)},
  volume = {36},
  number = {4},
  pages = {to appear},
  year = {2017}
}
