3D Attention-Driven Depth Acquisition for Object Identification

Kai Xu1,2,3, Yifei Shi1, Lintao Zheng1, Junyu Zhang3, Min Liu1, Hui Huang3, Hao Su4, Daniel Cohen-Or5, Baoquan Chen2

1National University of Defense Technology, 2Shandong University, 3Shenzhen University, 4Stanford University, 5Tel-Aviv University

ACM Transactions on Graphics (SIGGRAPH Asia 2016), 35(6)

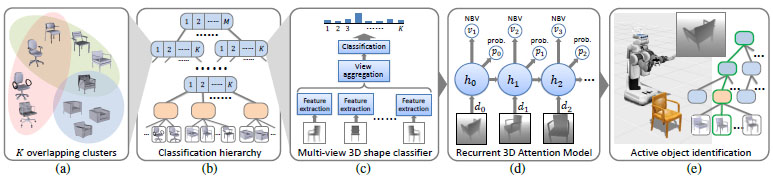

Figure 1: We present an autonomous system that actively identifies objects in an indoor scene (a) through consecutive depth acquisitions, for online scene modeling. The scene is first roughly scanned and segmented to generate 3D object proposals. Targeting an object proposal (b), the robot performs multi-view object identification against a 3D shape database, driven by a 3D Attention Model. The retrieved 3D models are inserted into the scanned scene (c), replacing the corresponding object scans, thus incrementally constructing a 3D scene model (d).


Abstract


We address the problem of autonomously exploring unknown objects in a scene through consecutive depth acquisitions. The goal is to model the scene by identifying the objects online from among a large collection of 3D shapes. Fine-grained shape identification demands a meticulous series of observations attending to varying views and parts of the object of interest. Inspired by the recent success of attention-based models for 2D recognition, we develop a 3D Attention Model that selects the best views to scan from, as well as the most informative regions in each view to focus on, to achieve efficient object recognition. The region-level attention leads to focus-driven features that are robust against object occlusion. The attention model, trained with the 3D shape collection, encodes the temporal dependencies among consecutive views with deep recurrent networks. This facilitates order-aware view planning that accounts for robot movement cost. To achieve instance-level identification, the shape collection is organized into a hierarchy associated with pre-trained hierarchical classifiers. The effectiveness of our method is demonstrated on an autonomous robot (PR) that explores a scene and identifies the objects for scene modeling.
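To make "order-aware view planning that accounts for robot movement cost" concrete, here is a minimal sketch that greedily picks the next viewpoint by trading a per-view informativeness score against the angular distance the robot must travel. The scoring rule, the weight `lam`, and the names `plan_views` and `angular_distance` are hypothetical illustrations; in the paper the next-best-view is estimated by the trained recurrent attention model, not by this hand-written rule.

```python
import math
from typing import List, Sequence


def angular_distance(a: float, b: float) -> float:
    """Shortest angular distance (radians) between two azimuths on a circle."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def plan_views(azimuths: Sequence[float], info_scores: Sequence[float],
               start: float, num_views: int, lam: float = 0.5) -> List[int]:
    """Greedy, order-aware view planning with a hypothetical scoring rule.

    At each step the robot picks the unvisited viewpoint maximizing
        info_score - lam * movement_cost,
    where movement_cost is the angular distance from its current azimuth.
    Because the cost depends on where the robot currently is, the visiting
    order matters, not just the set of chosen views.
    """
    visited: List[int] = []
    current = start
    for _ in range(min(num_views, len(azimuths))):
        best_idx, best_val = -1, -math.inf
        for i, (az, score) in enumerate(zip(azimuths, info_scores)):
            if i in visited:
                continue
            value = score - lam * angular_distance(current, az)
            if value > best_val:
                best_idx, best_val = i, value
        visited.append(best_idx)
        current = azimuths[best_idx]
    return visited


if __name__ == "__main__":
    # Eight candidate viewpoints evenly spaced around the object.
    azimuths = [k * math.pi / 4 for k in range(8)]
    scores = [0.1, 0.2, 0.9, 0.3, 1.0, 0.2, 0.1, 0.4]  # made-up scores
    print(plan_views(azimuths, scores, start=0.0, num_views=3))           # [2, 4, 3]
    print(plan_views(azimuths, scores, start=0.0, num_views=3, lam=0.0))  # [4, 2, 7]
```

With `lam=0.0` the planner ignores travel and simply visits the highest-scoring views (4, 2, 7), swinging back and forth around the object; with a movement penalty it prefers a shorter sweep (2, 4, 3) that still reaches the most informative view.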


Images

Figure 2: Our core contribution is a recurrent 3D attention model (3D-RAM) (d). At each time step, it takes a depth image as input, updates its internal state, performs shape classification, and estimates the next-best-view. To make instance-level classification feasible, we organize the shape collection with a classification hierarchy (b). At each node of the hierarchy, a multi-view 3D shape classifier (c) assigns the shapes associated with that node to K overlapping groups (a). Online object identification is guided by 3D-RAM while traversing down the hierarchy for coarse-to-fine classification (e). At each visited node, 3D-RAM employs that node's feature extractor and classifier.
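The coarse-to-fine traversal in Figure 2 can be sketched as a walk down a tree whose internal nodes each own a classifier that selects one of K child groups, with groups allowed to share shapes. This is a simplified, hypothetical sketch: `Node`, `identify`, `shared_shapes` and the toy feature dictionary are illustrative assumptions, and each node's classifier here is a plain function of one observation rather than the multi-view classifier driven by 3D-RAM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set

Observation = Dict[str, float]  # hypothetical stand-in for what the robot observed


@dataclass
class Node:
    """One node of a shape-classification hierarchy (hypothetical structure)."""
    shapes: List[str]                                          # shapes under this node
    children: List["Node"] = field(default_factory=list)       # K child groups
    classifier: Optional[Callable[[Observation], int]] = None  # picks a child index


def identify(root: Node, obs: Observation) -> List[str]:
    """Coarse-to-fine identification: follow each node's classifier to a leaf."""
    node = root
    while node.children:
        assert node.classifier is not None, "internal nodes need a classifier"
        node = node.children[node.classifier(obs)]
    return node.shapes


def shared_shapes(node: Node) -> Set[str]:
    """Shapes that appear in more than one child group, i.e. the overlap."""
    seen: Set[str] = set()
    shared: Set[str] = set()
    for child in node.children:
        for s in set(child.shapes):
            if s in seen:
                shared.add(s)
            else:
                seen.add(s)
    return shared


def leaf(shape_id: str) -> Node:
    return Node([shape_id])


# Toy hierarchy: K = 2 groups at the root, and "stool" belongs to both groups.
seating = Node(["chair", "stool"], [leaf("chair"), leaf("stool")],
               classifier=lambda o: 0 if o["has_back"] else 1)
backless = Node(["stool", "table"], [leaf("stool"), leaf("table")],
                classifier=lambda o: 0 if o["height"] < 0.6 else 1)
root = Node(["chair", "stool", "table"], [seating, backless],
            classifier=lambda o: 0 if o["has_back"] else 1)

if __name__ == "__main__":
    print(identify(root, {"has_back": 0.0, "height": 0.45}))  # ['stool']
    print(shared_shapes(root))                                # {'stool'}
```

Letting groups overlap means a shape near a decision boundary (here the stool) can still be reached through more than one branch, so an early coarse decision is less likely to rule out the correct instance.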

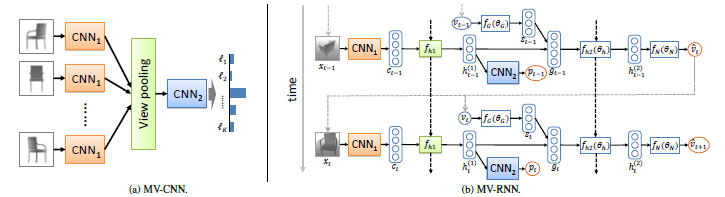

Figure 3: Our recurrent 3D attention model (b) is a two-layer stacked recurrent neural network with an MV-CNN (a) plugged in. The subnetworks of MV-RNN responsible for feature extraction, feature aggregation, and classification are substituted by CNN1, view pooling, and CNN2 of MV-CNN, respectively. Corresponding subnetworks are shaded in the same color. (b) shows a two-time-step expansion of MV-RNN. Dashed arrows indicate information flow across time steps, while solid arrows indicate information flow within a time step.
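One time step of the network in Figure 3 can be illustrated with a toy forward pass: a per-view feature extractor (standing in for CNN1), aggregation by view pooling, a classifier (standing in for CNN2), and a recurrent layer whose state drives the next-view estimate. In MV-CNN, view pooling is an element-wise max across views; here it is applied across time steps. Everything else is an assumption for illustration: random linear layers replace the CNNs, the toy sizes and the names `cnn1`, `cnn2`, `step`, `run` are hypothetical, and the (azimuth, elevation) head is not taken from the paper.

```python
import math
import random
from typing import List, Optional, Tuple

Vector = List[float]
Matrix = List[Vector]

D_IN, D_FEAT, D_HID, N_CLASSES = 16, 8, 6, 4  # toy sizes (assumed); depth is a flattened 4x4 image
_rng = random.Random(0)


def rand_matrix(rows: int, cols: int, scale: float) -> Matrix:
    return [[_rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


# Random linear layers standing in for CNN1, CNN2, the recurrent layer and the view head.
W_CNN1 = rand_matrix(D_FEAT, D_IN, 0.3)
W_CNN2 = rand_matrix(N_CLASSES, D_FEAT, 0.3)
W_REC = rand_matrix(D_HID, D_FEAT + D_HID, 0.3)
W_VIEW = rand_matrix(2, D_HID, 0.3)


def cnn1(depth: Vector) -> Vector:
    """Per-view feature extractor (stand-in): linear map + ReLU."""
    return [max(0.0, x) for x in matvec(W_CNN1, depth)]


def cnn2(feat: Vector) -> Vector:
    """Classifier (stand-in): linear map + numerically stable softmax."""
    logits = matvec(W_CNN2, feat)
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def step(depth: Vector, pooled: Optional[Vector], h2: Vector
         ) -> Tuple[Vector, Vector, Vector, Tuple[float, float]]:
    feat = cnn1(depth)
    # Layer 1: view pooling, an element-wise max over all views seen so far.
    pooled = feat if pooled is None else [max(a, b) for a, b in zip(pooled, feat)]
    probs = cnn2(pooled)
    # Layer 2 (assumed form): a plain tanh recurrent update on [pooled, h2].
    h2 = [math.tanh(z) for z in matvec(W_REC, pooled + h2)]
    raw = matvec(W_VIEW, h2)
    # Hypothetical next-view head: azimuth in (-pi, pi), elevation in (-pi/2, pi/2).
    view = (math.pi * math.tanh(raw[0]), 0.5 * math.pi * math.tanh(raw[1]))
    return pooled, h2, probs, view


def run(depth_images: List[Vector]):
    """Unroll over a sequence of depth images; return final pooled feature and per-step outputs."""
    pooled: Optional[Vector] = None
    h2 = [0.0] * D_HID
    outputs = []
    for depth in depth_images:
        pooled, h2, probs, view = step(depth, pooled, h2)
        outputs.append((probs, view))
    return pooled, outputs
```

Because max is commutative, the pooled feature does not depend on the order in which views arrive; order enters only through the recurrent state `h2`, which is what lets a model of this shape plan views in an order-aware way.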

Figure 4: Visualization of the identification process and of the attention (on both the acquired depth images and the identified shapes) for six real objects.
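The region-level attention visualized on the depth images can be approximated, for illustration, by generic soft attention over image patches: split the depth image into a grid, describe each patch, score it against a query, and take a softmax-weighted average. This page does not specify how region attention is computed, so this is an assumed, generic scheme: the hand-crafted (mean, std) patch features, the query vector, and the names `patch_features` and `soft_region_attention` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]


def patch_features(depth: Image, grid: int) -> List[Tuple[float, float]]:
    """Split an HxW depth image into grid x grid patches; return (mean, std) per patch.

    Patches are listed row-major; H and W are assumed divisible by grid.
    """
    h, w = len(depth), len(depth[0])
    ph, pw = h // grid, w // grid
    feats = []
    for i in range(grid):
        for j in range(grid):
            vals = [depth[r][c] for r in range(i * ph, (i + 1) * ph)
                    for c in range(j * pw, (j + 1) * pw)]
            mean = sum(vals) / len(vals)
            std = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))
            feats.append((mean, std))
    return feats


def soft_region_attention(feats: Sequence[Tuple[float, float]],
                          query: Tuple[float, float],
                          temperature: float = 1.0
                          ) -> Tuple[List[float], Tuple[float, float]]:
    """Softmax attention over patches; returns (weights, attended feature)."""
    scores = [(f[0] * query[0] + f[1] * query[1]) / temperature for f in feats]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    total = sum(exps)
    weights = [e / total for e in exps]
    attended = (sum(wt * f[0] for wt, f in zip(weights, feats)),
                sum(wt * f[1] for wt, f in zip(weights, feats)))
    return weights, attended


if __name__ == "__main__":
    # 8x8 depth image: flat background, with depth variation only in the bottom-right quadrant.
    depth = [[((r - 4) * 0.25 + (c - 4) * 0.05) if r >= 4 and c >= 4 else 0.0
              for c in range(8)] for r in range(8)]
    weights, attended = soft_region_attention(patch_features(depth, 2), (0.0, 10.0))
    print(max(range(4), key=weights.__getitem__))  # 3 (the bottom-right patch)
```

Reshaping `weights` into a grid-by-grid array gives a coarse heat map of where the model attends; a patch whose features score poorly against the query, for example an occluded region, simply receives little weight in the attended feature.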


Thanks

We thank the anonymous reviewers for their valuable comments and suggestions. This work was supported in part by NSFC (61572507, 61532003, 61522213, 61232011), 973 Program (2015CB352501, 2014CB360503), Guangdong Science and Technology Program (2015A030312015, 2014B050502009, 2014TX01X033, 2016A050503036) and Shenzhen Innovation Program (JCYJ20151015151249564).


Bibtex

@article{xu_siga16,
  title = {3D Attention-Driven Depth Acquisition for Object Identification},
  author = {Kai Xu and Yifei Shi and Lintao Zheng and Junyu Zhang and Min Liu and Hui Huang and Hao Su and Daniel Cohen-Or and Baoquan Chen},
  journal = {ACM Transactions on Graphics (Proc. of SIGGRAPH Asia 2016)},
  volume = {35},
  number = {6},
  pages = {Article No. 238},
  year = {2016}
}
